It’s Friday night, it’s been a long week, and I am rewarding myself with sushi from my favorite spot. I place my usual order for a hosomaki combo, but since I don’t like the tuna roll, under special instructions, I start to write “please swa—” and my predictive text feature assumes that the rest of the sentence will be “p tuna roll with spicy salmon.” My phone has learned my preferences; this is machine learning at work.

What is Machine Learning?

Machine learning is a part of the umbrella of artificial intelligence, specifically related to how systems can be constructed and used to analyze patterns from previous example data to predict findings in future situations (Fradkov, 2020; Müller & Guido, 2016). Machine learning is separated into two categories. Supervised machine learning is when the algorithm uses inputs to find a given output and learns the relationships between inputs and outputs (Müller & Guido, 2016). For example, having email sorted into different folders based on sender is an example of supervised machine learning. Unsupervised machine learning allows algorithms to look at relationships between the inputs (Müller & Guido, 2016). A common example of unsupervised machine learning is when a website shows other items that consumers have bought. The website has learned that there may be a relationship between these items (Müller & Guido, 2016).

From a consumer perspective, machine learning makes life feel more catered. Apps on smartphone help commuters avoid traffic that may not have occurred yet, because the background algorithm can predict where accidents and traffic congestion are likely to occur later in the day. Banks can monitor credit card purchases and let consumers know if the account activity that deviates from their usual pattern. Email and calendar apps inform their users of a busy upcoming week and make suggestions to better manage the user’s time or to create healthy boundaries. Each of these solutions is created based on previous patterns generated by the user or others like them.

Machine Learning in Higher Education

Machine learning is also built into software and apps used in student affairs. The most common example is in retention based software that identify students who are less likely to persist based indicators from the institution’s learning management system (i.e. clicks, time spent on assignments compared to classmates, promptness at reading messages, number of characters in discussion board posts), their demographics (i.e. high school GPA, ACT/SAT score, gender, race/ethnicity, first-generation status, Pell-eligibility, college, major) and other collectable data such as grades compared to classmates, attendance, course load, and previous grades (Mathews, 2018). Using five to ten years of previous retention data and over 100 indicators, the software constructs a model to indicate whether or not a student will retain. As the software scans current student engagement in the classroom, tutoring, co-curricular event attendance, it compares their engagement to patterns from the past and can predict the likelihood of a student’s persistence (Brasca et al., 2022; Mathews, 2018). In a recent article (Cliburn, 2022), University of Nevada Las Vegas (UNLV), Georgia State University, and Elon University spoke about incorporating natural language processing (NLP), a form of unsupervised machine learning, with their chatbots to answer students’ questions 24/7. Chatbots use NLP to understand users’ questions and use websites or other information to assist the user (Géron, 2022; Müller & Guido, 2016). The chatbots can also aggregate commonly asked questions to help administrators better understand student needs (Cliburn, 2022). In the future, it is likely that machine learning will help higher education improve matriculation through improved targeting to prospective students, increasing advising by looking at which schedules led to success for students, and promoting student engagement by learning what types of activities appeal to different kinds of students. Each of these suggestions are likely to help students find deeper meaning and higher likelihood of success during their college experience.

Machine Learning Workflow

While the future of machine learning for higher education, and specifically student affairs, is bright, machine learning requires student affairs professionals work with the software to ensure that the answers makes sense and are used in an ethical and meaningful way. To do this, student affairs professionals must become familiar with a machine learning workflow. Géron (2022) introduced an eight step machine learning workflow:

- Look at the big picture.

- Get the data.

- Discover and visualize the data to gain insights.

- Prepare the data for Machine Learning algorithms.

- Select a model and train it.

- Fine-tune your model.

- Present your solution.

- Launch, monitor, and maintain your system. (Chapter 1)

In each step of the workflow, humans must make choices and determine the best solutions so that the algorithm can provide accurate, ethical, and meaningful results. Steps 1 and 2 require a human to frame the question and choose the correct dataset(s) for the algorithm to learn from. Once the data has been selected, it must be cleaned, and previsualized to remove extraneous data. Determining the method for data cleaning and what data should be removed is another decision point. In the step 5, humans must select a model. There are many models in machine learning, and subcategories within each model type, each with its own biases and assumptions (Géron, 2022; Gill et al., 2020; Müller & Guido, 2016). Humans must choose the best model to fit their questions. In steps 6 and 7 humans must decide when the model is working well enough to answer the question. In step 8, humans must decide what data should be shared with different people. Machine learning is truly a partnership between a computer and humans. For machine learning to work well and do good, the people must consider the ethical questions and guidelines.

Ethics and Machine Learning

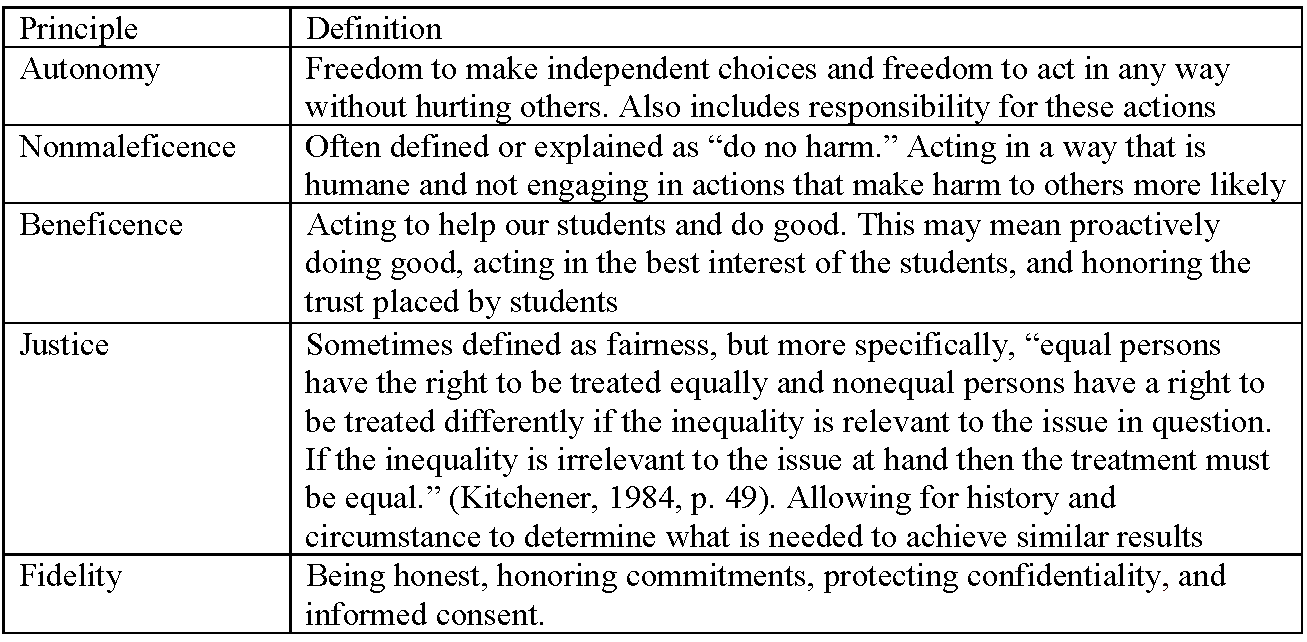

When looking to consider ethical dilemmas, student affairs often uses Kitchener’s (1984) Five Ethical Principles for Helping Professionals: Autonomy, Nonmaleficence, Beneficence, Justice, and Fidelity as a guiding framework. These principles, as defined in Table 1, promote the shared values of students affairs, but they values are not discrete. In fact, there is friction within the principles (i.e. what may seem like justice to one person may not feel that way to another) and between the principles (i.e. more autonomy can lead to a decrease in beneficence). Even with these considerations, the principles can serve as a guides for student affairs professionals seeking help to work with students. When considering adopting a machine learning software, students are considered both as people and as the data.

Table 1: Kitchener’s Ethical Principles for Helping Professionals (1984)

The consideration of ethics in machine learning is a relatively new question. Currently there are no regulations on how data analysts can deploy machine learning algorithms (Hall et al., 2021; Lo Piano, 2020; Pazzanese, 2020). Guidelines such as Kitchener’s Ethical Principles for Helping Professionals can help bridge the gap in the absence of laws or policies, and provide guidance for how to adapt the machine learning workflow to provide ethical and meaningful information.

Autonomy

As a student affairs professional, autonomy is often defined as a student’s freedom to make their own choices and take responsibility for their choices, without infringing on other’s freedoms (Kitchener, 1984). Autonomy for machine learning within higher education also consider the role of choice: choosing the model and deploying the model.

Choosing a model

As mentioned earlier, there are many different machine learning models (i.e. classification, regression, NLP) and each model has its own mathematical biases and assumptions. Models that may work well in one scenario may not be suited to another scenario, based on the data and the questions (Fradkov, 2020; Géron, 2022; Müller & Guido, 2016; Singh et al., 2016). To respect autonomy of the machine learning process, student affairs professional need to ask questions about the choice of model being put into place and take responsibility for the decisions the model is making. Like all mathematical models, machine learning models can give false positive and false negative results. Student affairs professional must understand the likelihood of false results and the meaning of all results (Müller & Guido, 2016; Pazzanese, 2020; Singh et al., 2016) They also need to understand what policies and procedures would be enacted by a false result and how that would affect the student.

Deploying a model

When the model is deployed, staff who have the appropriate access will see insights about students and recommendations for action (or even automated actions). The result of deploying machine learning may encourage staff to be advisory or prescriptive (Cubarrubia & Le, 2019). With respect to autonomy, student affairs professionals need to allow the student to make their own choices, even if those choices conflict with the insights being given. As an example, if a machine learning model says that a student is unlikely to retain at the institution, the student still must choose not to return to the institution to not retain. The data can inform staff that interventions may be necessary, but the data should not prescribe that the student can no longer receive help from the institution, since they are unlikely to retain. This is the difference between letting the data advise and using the insights from a machine learning algorithm as a prescription.

Nonmaleficence

Like other helping professionals, student affairs professionals try to abide by the definition of nonmaleficence as do no harm. However, machine learning can lead to harm being done, particularly if humans do not infuse ethics into the machine learning workflow (Hall et al., 2021; Wiens et al., 2019). The place where harm is most likely in the machine learning workflow is in choosing the data, steps 2 and 3, that will be used by the machine learning model.

Getting the data

Machine learning occurs as a model learns from the provided data (known as training data) but humans determine which datasets are given to the machine learning model as training data (Müller & Guido, 2016). When choosing training data, student affairs professionals must ensure that the data is accurate and provides a wide variety of examples (Géron, 2022; Müller & Guido, 2016). They also must consider what bias may be present in the training data. For example if the training data came from a time when quotas were a standard part of the admissions processes, the model would learn to incorporate quotas to predict future admissions processes (Gill et al., 2020; Hall et al., 2021; Thelin, 2011). If discriminatory practices, implicitly or explicitly, are part of the training data, they will often be learned and replicated in the algorithm.

Visualizing and exploring the data

The importance of not teaching a machine learning model to perpetuate biases cannot be overstated when thinking from an ethical lens and focusing on nonmaleficence. While computers, and thus machine learning, are seen as neutral, mathematical, and logical, machine learning learns from the example data (Cubarrubia & Le, 2019; Pazzanese, 2020; Wiens et al., 2019). If the example data has bias with in it, the machine will learn to use that bias within its predictions. Because the machine learning model is seen as logic based, the replication of these biases becomes more accepted as fact rather than as bias (Cubarrubia & Le, 2019; Pazzanese, 2020; Wiens et al., 2019). This exacerbates the importance of choosing the correct data with multiple examples to teach and train the model. During the visualization and exploration of the workflow, humans can explore that the data with clear eyes prior to training the model, and prevent the model from learning biases.

Beneficence

Beneficence is the idea of acting to help others and acting within their best interests. Thinking through the intersection of machine learning and student affairs ethics, the primary overlap is the first step of the machine learning workflow, look at the big picture and at the second to last step, present a solution.

Look at the Big Picture

During this step, the machine learning workflow considers what question should be asked, why ask that question, and if machine learning is the right solution to that question (Géron, 2022; Müller & Guido, 2016). Students share their data with the university and expect the university professional to be responsible stewards of their data. Choosing to frame machine learning from questions that will help others succeed or prevent others from failure is acting from a place of beneficence. When deciding to adopt machine learning, it is important to ask questions: who will the results of this project serve? Will anyone be harmed by the results of this model? Will this model help us do greater good for our students? Will this model put any of our students in harm’s way? Does this project fit with our institutional values? (Cubarrubia & Le, 2019; Hall et al., 2021; Wiens et al., 2019)

Presenting a Solution

At the step of presenting a solution in the machine learning workflow, the analyst has determined which question to ask, model to choose, and how well the model will predict the answers (Géron, 2022; Müller & Guido, 2016). When presenting a solution, student affairs professional must share their analysis with other campus partners and explain why the model’s solution will help students succeed. The solution must be shared in a way that is understandable, answers other stakeholder’s questions, and accurately describes any issues within the model. No model can perfectly predict data, so presenting the solution teaches other colleagues how to interpret the data to act in the best interest of the student and use the data well.

Justice

As a helping professional, the concept of justice is closer to the idea of equity than equality. The idea is to provide similar results for everyone, understanding the method to obtain those results requires different strategies (Kitchener, 1984). In the machine workflow the step of launching monitoring and maintaining your system are points where the concept of justice can be easily embedded.

Launching Monitoring and Maintaining your System

Student affairs professionals want all students to retain, to be academically successful, graduate on time and find a job. Machine learning can help students better understand which inputs (or combination of inputs) are most responsible for achieving specific outcomes, using the subfield of machine learning known as Explainable AI (Gunning et al., 2019). In Explainable AI, the focus is on what inputs are controlling the model and which inputs if changed will most affect the outcome. As a model becomes more fine-tuned and launched, data analysts can work with student affairs professionals to identify those key areas where small shifts could have large impacts. Explainable AI can help student affairs professionals identify actions that students can take to help them succeed (Char et al., 2018; Gunning et al., 2019; Wiens et al., 2019). Maintaining the model is required to keep finding new solutions or combinations of solutions to help students succeed.

Fidelity

The idea of fidelity reminds students affairs professionals to follow through and honor their commitments to students, while maintaining student data confidentiality. When thinking through the machine learning workflow, student affairs professionals must consider regulations regarding student data, such as FERPA and HIPAA.

FERPA

College students have additional privacy protections that govern the information that may be given out about them. Most often cited is the Family Educational Rights and Privacy Act (FERPA) (20 U.S.C. § 1232g; 34 CFR Part 99). This law applies to all schools that receive fund from the U.S. Department of Education. When a student comes of age (defined as 18 years old) or attends a school beyond a high school level, they have the right to view and request corrections to their educational records. The student’s educational record is considered private except in specific conditions or directory information. The most common specific conditions are “School officials with legitimate educational interest, Appropriate parties in connection with financial aid to a student; Organizations conducting certain studies for or on behalf of the school; [and] Appropriate officials in cases of health and safety emergencies.” (FERPA, 2021) . Directory information include the student’s name, address, telephone number, date and place of birth, honors and awards, and dates of attendance and may be disclosed without a students’ consent, although a student may request that a school not disclose specific directory information. With regards to machine learning, this means that student data can be used within a machine learning context, but the study must be conducted on behalf of the school. Additionally, data may need to be removed if a student has requested that the school not disclose directory information to outside organizations.

HIPAA

College students are also protected by the Health Information Portability and Accountability Act ((Health Insurance Portability and Accountability Act of 1996 (HIPAA) | CDC, 2022), 29 U.S.C. § 1181 et seq.) which protects information related to health status, healthcare, or healthcare payment, and prevents this data from being shared or, at times, combined with other data regarding the same individual. While there are clauses within HIPAA to allow data to be used by business associates for data analysis, personal healthcare data is subject to different regulations when considering machine learning. In student affairs work this may include data about student disability, student usage of counseling, and student usage of health services(Wiens et al., 2019).

Final Thoughts

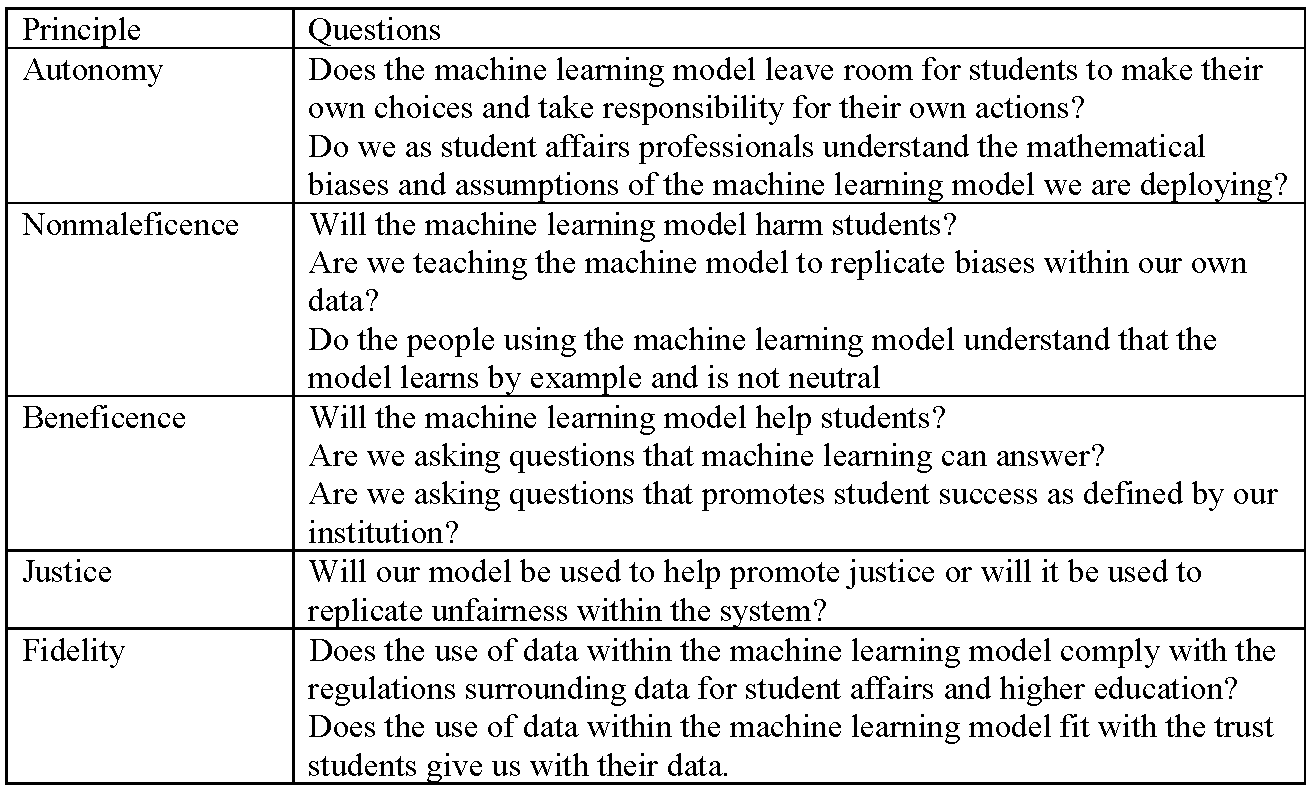

Machine learning models are powerful software programs and applications that can be used by student affairs professionals to improve student success, if student affairs professionals understand how the models function. Student affairs professionals bear the weight of asking questions to ensure that the models are created, deployed, and maintained with mind towards ethics. Student affairs professionals do not need to be data analysts to ask questions about the machine learning models that will facilitate in helping students. Examples of these questions, divided by principle are shown in Table 2. By asking these type of questions, student affairs professionals embed the principles of our profession as helping professionals into our new non-human partners.

Table 2: Ethical Questions for Machine Learning Workflows

Author

Dr. Rebecca Goldstein serves as the Director of Assessment and Research at Florida Atlantic University. In this role, she leads strategic planning at the divisional level and oversees strategic planning and assessment efforts for 20 departments. She also leads analytics and dashboard use for the Division of Student Affairs and collaborates with Academic Affairs to determine Student Success Metrics. Rebecca earned her Doctor of Philosophy in Higher Education Leadership from Florida Atlantic University and her dissertation, “How Student Affairs Directors Use Assessment to Make Changes” provided a focus on the actions directors take in order to put their data into practices to improve their organizations. She also has an M.Ed in Higher Education and Student Affairs from the University of South Carolina and a B.S. in Environmental Science from Indiana University. Her professional interests include big data analytics, assessment use and practice, organizational leadership and dynamics, and business approaches to student affairs.

Dr. Rebecca Goldstein serves as the Director of Assessment and Research at Florida Atlantic University. In this role, she leads strategic planning at the divisional level and oversees strategic planning and assessment efforts for 20 departments. She also leads analytics and dashboard use for the Division of Student Affairs and collaborates with Academic Affairs to determine Student Success Metrics. Rebecca earned her Doctor of Philosophy in Higher Education Leadership from Florida Atlantic University and her dissertation, “How Student Affairs Directors Use Assessment to Make Changes” provided a focus on the actions directors take in order to put their data into practices to improve their organizations. She also has an M.Ed in Higher Education and Student Affairs from the University of South Carolina and a B.S. in Environmental Science from Indiana University. Her professional interests include big data analytics, assessment use and practice, organizational leadership and dynamics, and business approaches to student affairs.

Works Cited

Brasca, C., Marya, V., Krishman, C., Lam, M., & Law, J. (2022, April 7). Machine learning in higher education | McKinsey. https://www.mckinsey.com/industries/education/our-insights/using-machine-learning-to-improve-student-success-in-higher-education

Char, D. S., Shah, N. H., & Magnus, D. (2018). Implementing Machine Learning in Health Care—Addressing Ethical Challenges. The New England Journal of Medicine, 378(11), 981–983. https://doi.org/10.1056/NEJMp1714229

Cliburn, E. (2022, April 18). Universities Embrace Artificial Intelligence to Support Students. INSIGHT Into Diversity. https://www.insightintodiversity.com/universities-embrace-artificial-intelligence-to-support-students/

Cubarrubia, A., & Le, M. (2019). Navigating Institutional Culture in Ensuring the Ethical Use of Data in Support of Student Success and Institutional Effectiveness. New Directions for Institutional Research, 2019(183), 17–26. https://doi.org/10.1002/ir.20309

Family Educational Rights and Privacy Act (FERPA). (2021, August 25). [Guides]. US Department of Education (ED). https://www2.ed.gov/policy/gen/guid/fpco/ferpa/index.html

Fradkov, A. L. (2020). Early History of Machine Learning. IFAC-PapersOnLine, 53(2), 1385–1390. https://doi.org/10.1016/j.ifacol.2020.12.1888

Géron, A. (2022). Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow: Concepts, tools, and techniques to build intelligent systems (3rd ed). O’Reilly.

Gill, N., Hall, P., Montgomery, K., & Schmidt, N. (2020). A Responsible Machine Learning Workflow with Focus on Interpretable Models, Post-hoc Explanation, and Discrimination Testing. Information, 11(3), Article 3. https://doi.org/10.3390/info11030137

Gunning, D., Stefik, M., Choi, J., Miller, T., Stumpf, S., & Yang, G.-Z. (2019). XAI—Explainable artificial intelligence. Science Robotics, 4(37), eaay7120. https://doi.org/10.1126/scirobotics.aay7120

Hall, P., Gill, N., & Cox, B. (2021). Responsible Machine Learning. O’Reilly Media, Inc. https://learning.oreilly.com/library/view/responsible-machine-learning/9781492090878/

Health Insurance Portability and Accountability Act of 1996 (HIPAA) | CDC. (2022, June 28). https://www.cdc.gov/phlp/publications/topic/hipaa.html

Kitchener, K. S. (1984). Intuition, Critical Evaluation and Ethical Principles: The Foundation for Ethical Decisions in Counseling Psychology. https://doi.org/10.1177/0011000084123005

Lo Piano, S. (2020). Ethical principles in machine learning and artificial intelligence: Cases from the field and possible ways forward. Humanities and Social Sciences Communications, 7(1), Article 1. https://doi.org/10.1057/s41599-020-0501-9

Mathews, J. (2018, October 3). Artificial intelligence in student success and beyond. https://eab.com/insights/blogs/student-success/artificial-intelligence-in-student-success-and-beyond/

Müller, A. C., & Guido, S. (2016). Introduction to machine learning with Python: A guide for data scientists (First edition). O’Reilly Media, Inc.

Pazzanese, C. (2020, October 26). Ethical concerns mount as AI takes bigger decision-making role. Harvard Gazette. https://news.harvard.edu/gazette/story/2020/10/ethical-concerns-mount-as-ai-takes-bigger-decision-making-role/

Singh, A., Thakur, N., & Sharma, A. (2016). A review of supervised machine learning algorithms. 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom), 1310–1315.

Thelin, J. R. (2011). A history of american higher education. JHU Press.

Wiens, J, Saria, S., Sendak, M., Ghassemi, M., Liu, V. X., Doshi-Velez, F., Jung, K., Heller, K., Kale, D., Saeed, M., Ossorio, P. N., Thadaney-Israni, S., & Goldenberg, A. (2019). Do no harm: A roadmap for responsible machine learning for health care. Nature Medicine, 25(9), 1337–1340. https://doi.org/10.1038/s41591-019-0548-6

No comments yet.