The concept for this proposed framework was born from what initially seemed to be a simple question asked of an assessment practitioner: can a learning outcome at a given Bloom’s level be assessed via a multiple-choice quiz? Answering this question first requires an examination of the related literature.

Cognitive learning theory has grounded academic program design for decades, particularly since the advent of Bloom’s (1956) Taxonomy. Identifying the complexity of learning outcomes and scaffolding it appropriately as students progress through each course of a program is critical when guiding them through a new discipline. Assessments are designed to align with goal criteria and to measure student learning. While identifying the Bloom’s level of a learning outcome gives a general idea of its nature, it does not indicate the outcome’s relative difficulty within that level.

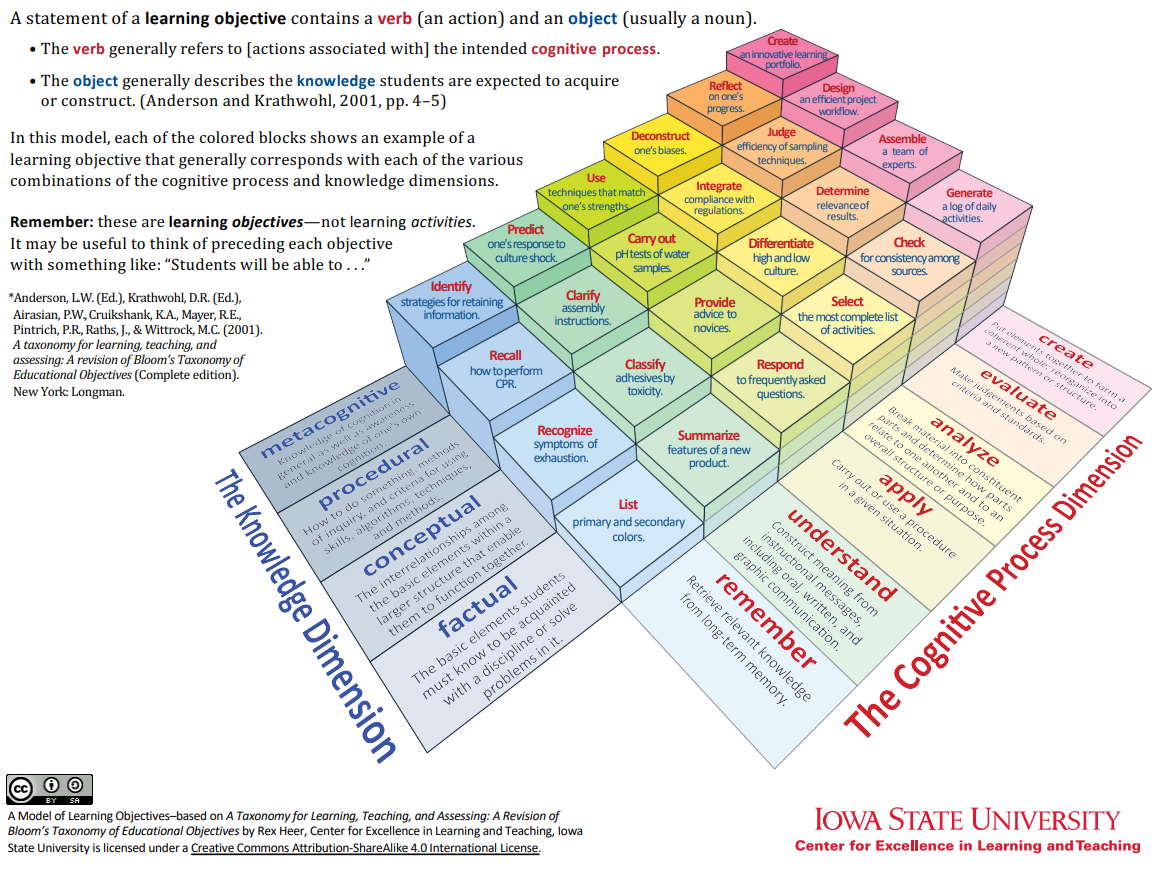

For example, an assessment asking students to brainstorm a list of dinner ideas and one asking them to develop a policy proposal to govern international trade both sit at the Create level, yet the latter is far more difficult than the former, and their associated learning outcomes should reflect that difference. Anderson et al. (2001) reinterpreted Bloom’s (1956) Taxonomy to distinguish the Cognitive Process Dimension, which represents the six Bloom’s levels educators commonly know, from their proposed Knowledge Dimension, which outlines types of knowledge, ranging from the concrete to the abstract, that apply across all Bloom’s levels.

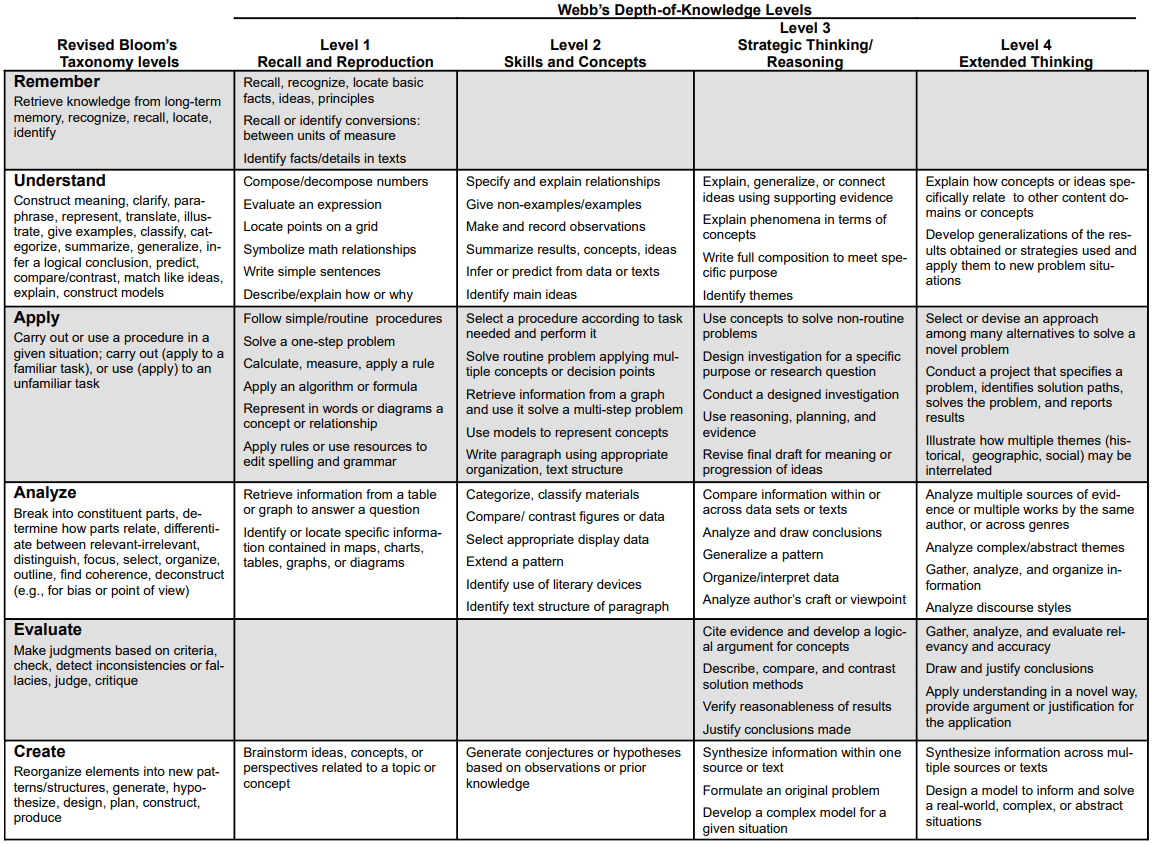

The Knowledge Dimension levels are Factual, Conceptual, Procedural, and Metacognitive, and they are overlaid with Bloom’s levels in Appendix A (Anderson et al., 2001; Iowa State University, 2012). Because a learning outcome can therefore be located along two dimensions, precise wording of its intent is essential to ensure clarity and accuracy. Webb (1997, 1999) introduced depth-of-knowledge levels as an alternative framework for evaluating how deeply a student must think to complete a task. His model also holds that a student may move fluidly between Bloom’s levels while completing a task. Hess et al. (2009) combined the models of Bloom (1956) and Webb (1997, 1999) in the Cognitive Rigor Matrix, which illustrates how each depth of knowledge manifests at each of Bloom’s cognitive levels (see Appendix B).

The matrix outlines the assessment goals to be accomplished at each combination of Bloom’s level and depth of knowledge. It is more detailed than the work of Anderson et al. (2001) because it more fully develops examples of the learning undertaken at each depth of knowledge. However, neither Hess et al. (2009) nor Anderson et al. (2001) attempts to outline, for each permutation, examples of assessment deliverables and the level of faculty involvement needed to evaluate them appropriately, leaving those decisions to the interpretation of educators. For example, when considering whether a learning outcome at a given Bloom’s level and Knowledge Dimension can be appropriately assessed through a short-answer test, the answer is not always immediately obvious. While many assessment mechanisms can be adapted to serve multiple Bloom’s levels, their flexibility has limits.

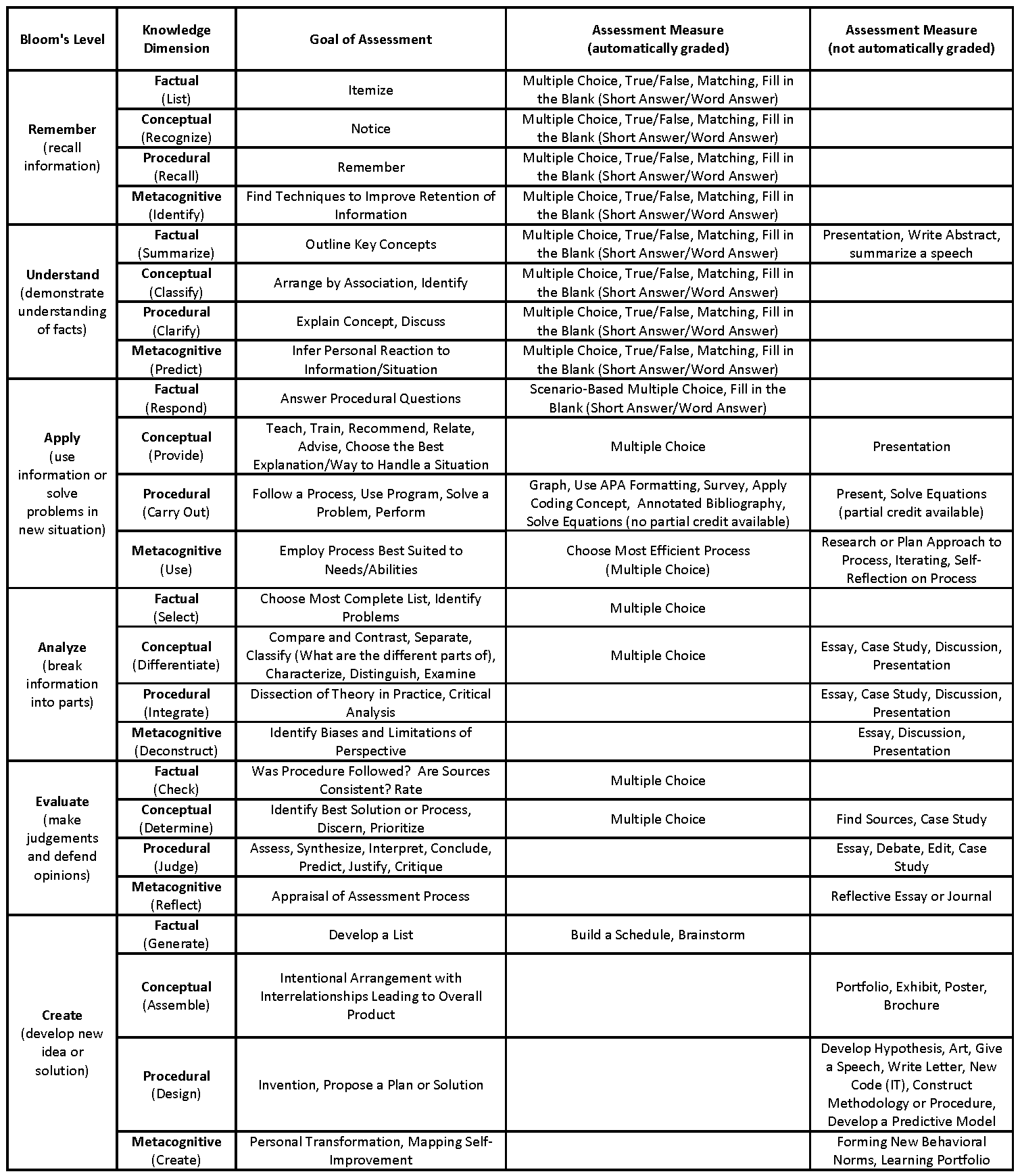

The proposed framework attempts to demystify the application of learning theory in assessment design. Returning to the original question of whether a learning outcome can be assessed via a multiple-choice quiz, the answer required looking beyond the Bloom’s level to the outcome’s Knowledge Dimension. For example, some learning outcomes written at the Analyze level are suitable for multiple-choice tests while others are not, depending on the complexity of their Knowledge Dimension. However, this determination could not be made from existing theoretical models alone; it required additional effort to connect specific assessment mechanisms to Bloom’s levels and their associated Knowledge Dimensions. Without a comprehensive map of how theory applies to assessment design, interpretations may vary and inconsistencies may go unnoticed. The challenge is greater still for those without training in educational theory, as is often the case with higher education faculty. This tool guides faculty and course designers in identifying the nature of course learning outcomes and their relationship to assessment approaches by expanding upon the revised Bloom’s Taxonomy (Anderson et al., 2001; Iowa State University, 2012).

The proposed framework not only removes the burden of aligning potential Assessment Measures to Bloom’s levels and Knowledge Dimensions but also, by closing the gap between assessment theory and practice, serves as a quick reference when generating ideas for assessing a learning outcome. Further, it should prove useful to academic leadership when establishing program and course learning outcomes, as it offers a visual translation of outcomes into potential Assessment Measures: a snapshot of how scaffolded learning may manifest and an opportunity to make adjustments so that students receive well-rounded exposure to learning experiences. Without such a tool, a designer may write a learning outcome without considering its Knowledge Dimension or its implications for potential Assessment Measures.

The proposed theory-to-practice assessment framework is presented in Appendix C. The two leftmost columns represent the original Cognitive Process and Knowledge Dimension matrix (Anderson et al., 2001). For each Bloom’s level and Knowledge Dimension, an expanded list of potential assessment objectives appears in the Goals of Assessment column. The rightmost columns list potential Assessment Measures and distinguish whether each can be graded automatically with little or no faculty involvement or requires more extensive faculty evaluation. The Goals of Assessment are not comprehensive, but they provide additional context for interpreting the original description shown in parentheses in the Knowledge Dimension column. Likewise, the Assessment Measures are not comprehensive, since they are limited only by the imagination of faculty and course designers; they represent some of the most common assessment vehicles. Blank cells indicate that no Assessment Measure is readily available or that one would likely be inferior or unreasonable compared with those in the corresponding column. For instance, assessing Remember Procedural (Recall) through a written exam would be inefficient and offer little benefit when a multiple-choice test accomplishes the same goal just as effectively.

An inherent challenge in creating the framework was overcoming the apparent boundaries set by the Knowledge Dimension (Anderson et al., 2001). The flexible application of the same verb to multiple Bloom’s levels, although appropriate, is confusing at first glance and requires the reader to consider the full context of a learning outcome, not only its verb, to decipher its intent. To demonstrate this point, consider how the verb Compare appears at three different Cognitive Process Dimension levels in the Revised Bloom’s Taxonomy Action Verbs (Anderson & Krathwohl, 2001). Similarly, the appearance of Predict as a descriptor for the Metacognitive dimension of Understand, as well as at depth-of-knowledge level 4 of Understand in the Cognitive Rigor Matrix, while germane in those contexts, implicitly undermines its use to describe higher-order predictive modeling and research skills (Anderson et al., 2001; Hess et al., 2009). These examples may appear to be contradictions within existing theories; in reality, those theories simply do not present them alongside the context-specific Assessment Measures that would resolve questions about their applicability in practice, as the proposed framework does. Therefore, we should not restrict ourselves to a literal interpretation of the suggestions and language in either the aforementioned theories or this proposed theory-to-practice assessment framework.

The proposed framework illuminates the interconnections among learning theory, assessment goals, and their associated measures. It should serve as a source of inspiration for program designers, an assessment roadmap for faculty and course designers, and a means for assessment practitioners to validate the learning-level alignment of learning outcomes and assessments. While this framework offers an initial platform for plainly bridging previously implicit connections between theory and practice, it will need to evolve continually as new scenarios and discipline-specific exceptions emerge.

Author

Dr. Eric Page is a Senior Assessment Manager at the University of Phoenix and Associate Faculty in its Doctor of Education program. His prior experience in higher education includes serving as Director of Retention and Assessment and Associate Program Director of General Education, as well as over a decade of teaching at the undergraduate and graduate levels. He also volunteers as a peer reviewer for the Journal of Higher Education Management. His educational credentials include an Ed.D. in Higher and Postsecondary Education from Argosy University, an M.S. in School Counseling from SUNY Albany, and a B.A. in Psychology from SUNY Geneseo.

References

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing (Abridged ed.). Boston, MA: Allyn and Bacon.

Anderson, L. W., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., Raths, J., & Wittrock, M. C. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. New York, NY: Longman.

Bloom, B. S. (1956). Taxonomy of educational objectives, handbook I: The cognitive domain. New York, NY: David McKay.

Hess, K. K., Jones, B. S., Carlock, D., & Walkup, J. R. (2009). Cognitive rigor: Blending the strengths of Bloom’s taxonomy and Webb’s depth of knowledge to enhance classroom-level processes. Online Submission.

Iowa State University. (2012). A model of learning objectives: A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Iowa State University Center for Excellence in Learning and Teaching. https://www.learningoutcomesassessment.org/wp-content/uploads/2019/10/RevisedBloomsHandout.pdf

Webb, N. (1997). Criteria for alignment of expectations and assessments in mathematics and science education (Research Monograph No. 6). Washington, D.C.: CCSSO.

Webb, N. (1999, August). Alignment of science and mathematics standards and assessments in four states (Research Monograph No. 18). Washington, D.C.: CCSSO.

Appendix

Appendix A

Appendix B

Appendix C